Call me crusty, an old fart, unwilling to embrace change… but Docker has always felt like a cop-out to me as a dev. Figure out what breaks and fix it so your app is more robust; stop being lazy.

I pretty much refuse to install any app that only ships as a Docker install.

No need to reply to this. You don't have to agree, and I know the battle has already been lost. I don't care. Hmmph.

Why put in a little effort when we can just waste a gigabyte of your hard drive instead?

I have similar feelings about how every website is now a JavaScript application.

Yeah, my time is way more valuable than a gigabyte of drive space. In what world is anyone’s not today?

It’s a gigabyte of every customer’s drive space.

The value add is even better from a customer's perspective.

That I can install far less software on legacy devices because everything new is ridiculously bloated?

Don’t you get it? We’ve saved time and added some reliability to the software! Sure, it takes 3-5x the resources it needs and costs everyone else money, but WE saved time and can say it’s reliable. /S

3-5x the resources my ass.

Mine, on my 128 GB dual-boot laptop.

How many Docker containers would you deploy on a laptop? Also, 128 GB is tiny even for an SSD these days.

None, in fact, because I still haven’t gotten into using Docker! But that is one of the factors that pushes it down the list of things to learn.

I’ve had a number of low-storage laptops, mostly on account of low budget. Ever since taking an 8GB netbook for work (and personal) in the mountains, I’ve developed space-saving strategies and habits!

I love docker… I use it at work and I use it at home.

But I don’t see much reason to use it on a laptop? It’s more of a server thing. I have no docker/podman containers running on my PCs, but I have like 40 of em on my home NAS.

Yeah, I wonder if these people are just being grumpy grognards about something they don’t at all understand? Personal computers are not the use case here.

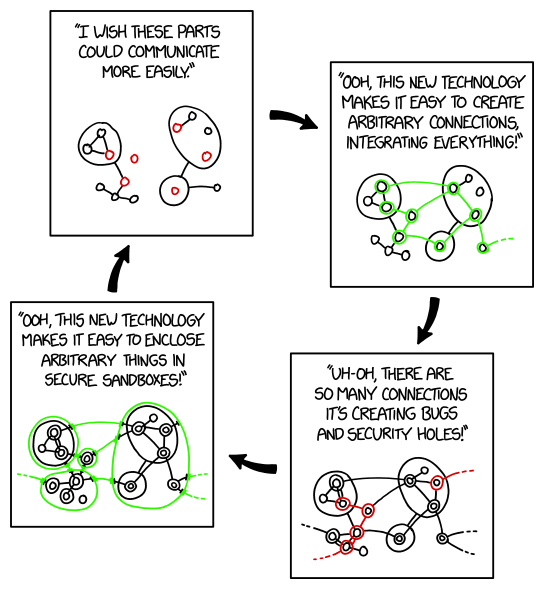

Docker is more than a cop-out, and for more than one use case. It’s a way to quickly deploy an app irrespective of the environment, so you can scale and rebuild quickly. It solves a problem that used to be solved by VMs, and in that respect it’s more efficient.

Well, nope. For example, FreeBSD doesn’t support Docker, so I can’t run dockerized software “irrespective of environment”. It has to be run on one of the supported platforms, which, unfortunately, I don’t use.

To deploy a Docker container to a Windows host, you first need to install a Linux virtual machine (via WSL, which uses Hyper-V under the hood).

It’s basically the same process for FreeBSD (minus the optimizations), right?

Containers still need to match the host OS/architecture; they are just sandboxed and layer in their own dependencies separately from the host.

But yeah, you can’t run them directly. Same for Windows, except I guess there are actual Windows Docker containers that don’t require WSL, but if people actually use those, it’d be news to me.

There’s also this cursed thing called Windows containers

Now let me go wash my hands, keyboard and my screen after typing that

If this is your take, your exposure has been pretty limited. While I agree some devs take it to the extreme, Docker is not a cop-out. It and similar containerization platforms are invaluable tools.

Using devcontainers (Docker containers in the IDE, basically) I’m able to get my team developing in a consistent environment in mere minutes, without needing to bother IT.

Using Docker orchestration, I’m able to do a lot in prod, such as automatic scaling, continuous deployment with automated testing, and, in the worst case, near-instantaneous reverts to a previously good state.

And that’s just how I use it as a dev.

As a self-hosting enthusiast, I can deploy new OSS projects without stepping through a lengthy install guide listing various obscure requirements. And if I did want to skip the container (which I’ve only done for a few things), I can simply read the Dockerfile to figure out what I need to do, instead of hoping the install guide covers all the bases.

And if I need to migrate to a new host? A few DNS updates and SCP/rsync later and I’m done.

I hate that it puts package management in devs' hands. The same devs who usually want root access to run their application and couldn't run a vulnerability scan for the life of them. So now, rather than having the one up-to-date version of a package on my system, I may have 3 different old ones with differing vulnerabilities, and devs who don’t want to change it because “I need this version because it works!”

Docker, or containers in general, provide isolation too, not just declarative image generation. It’s all neatly packaged into one tool that isn’t that heavy on the system either. It’s not a cop-out at all.

If I could choose, not for laziness, but for reproducibility and compatibility, I would only package software in 3 formats:

- Nix package

- Container image

- Flatpak

The rest of the native packaging formats are all good in their own way, but not as good. Some may have specific use cases that make them the best choice, like AppImage, soooo result…

Yeah, no universal packaging format yet

There’s another important problem Docker solves, beyond most of the issues pointed out here.

Security.

By using containerization, Docker effectively creates another important barrier that is incredibly hard to escape: the OS (container).

If one server is running multiple Docker containers, a vulnerability in one system does not expose the others. This is a huge security improvement. Now the attacker needs to breach both the application and then break out of a container in order to directly access other parts of the host.

Also, if a Docker image is big, the dev needs to select another base image. You can easily have images of around 100 MB now. With “distroless” images it is maybe down to around 30 MB, if I recall correctly. Far from 1 GB.

Reproducibility is also a huge efficiency booster. “Here, run this command and it will work perfectly on your machine.” And it actually does.

It also reliably enables self-healing servers, which means businesses don’t actually need to have people available 24/7.
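A minimal sketch of what “self-healing” boils down to at its simplest: a supervisor that restarts a crashed process with a growing delay, loosely modeled on the idea behind restart policies such as Docker’s `on-failure`. The function names (`supervise`, `make_flaky`), the retry limit, and the doubling delay here are illustrative assumptions, not Docker’s actual implementation.

```python
import time
from typing import Callable


def supervise(task: Callable[[], int], max_retries: int = 5, base_delay: float = 0.1,
              sleep: Callable[[float], None] = time.sleep) -> tuple[int, int]:
    """Run `task` until it exits with code 0 or the retries run out.

    Returns (final_exit_code, restarts_used). The delay doubles after each
    failure (hypothetical backoff, for illustration only).
    """
    delay = base_delay
    restarts = 0
    while True:
        code = task()
        if code == 0 or restarts >= max_retries:
            return code, restarts
        sleep(delay)  # back off before restarting the crashed task
        delay *= 2
        restarts += 1


def make_flaky(failures: int) -> Callable[[], int]:
    """Hypothetical task that 'crashes' (exit code 1) a fixed number of times."""
    state = {"left": failures}

    def task() -> int:
        if state["left"] > 0:
            state["left"] -= 1
            return 1  # nonzero exit code = crash
        return 0

    return task


if __name__ == "__main__":
    delays: list[float] = []
    print(supervise(make_flaky(2), sleep=delays.append))  # (0, 2)
    print(delays)  # [0.1, 0.2]
```

Injecting `sleep` keeps the demo instant; a real supervisor would also need health checks, since a process can hang without exiting.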

The use of containerization is maybe one of the greatest marvels in software dev in recent (10+) years.

Isn’t Docker massively insecure when compared to the likes of Podman, since Docker has to run as a root daemon?

I prefer Podman. But Docker can run rootless. It does run under root by default, though.

I don’t have in-depth knowledge of the differences and how big they are. So take the following with a grain of salt.

My main point is that using containerization is a huge security improvement. Podman seems to be even more secure. Calling Docker massively insecure makes it seem like something we should avoid, which takes focus away from the enormous security benefit containerization gives. I believe Docker is fine. I do use Podman myself, but only because Podman Desktop is free and Dockerfiles seem to run fine with Podman.

Edit: After reading a bit I am more convinced that the Podman way of handling it is superior, and that the improvement is big enough to recommend it over Docker in most cases.

Not only that, but containers in general run on the host system’s kernel, so the actual isolation of the containers is pretty minimal compared to virtual machines, for example.

What exactly do you think the VM is running on, if not the system kernel with potentially more layers?

Virtual machines do not use the host kernel; they run a full OS with kernel, cock and balls, on virtualized hardware on top of the host OS.

Containers use the host kernel and hardware without any layer of virtualization.

deleted by creator

What did you deploy?

I’m messing with self-hosting an LMM with a web front end right now.

deleted by creator

Oh, I’m totally getting MeTube. I use yt-dlp with a script.

deleted by creator

I said this a year and a half ago and I still haven’t. Awful decision; I now own servers too, so I should really learn them.

Yes, yes you really should

Oof. I’m anxious that folks are going to get the wrong idea here.

While OCI does provide security benefits, it is not a part of a healthy security architecture.

If you see containers advertised on a security architecture diagram, be alarmed.

If a malicious user gets terminal access inside a container, it is nice that there’s a decent chance that they won’t get further.

But OCI was not designed to prevent malicious actors from escaping containers.

It is not safe to assume that a malicious actor inside a container will be unable to break out.

Don’t get me wrong, your point stands: Security loves it when we use containers.

I just wish folks would stop treating containers as “load bearing” in their security plans.

You don’t have to ship a second OS just to containerize your app.

Now if only Docker could solve the “hey, I’m caching a layer that I think didn’t change” (Narrator: it did) problem, where even setting the “don’t fucking cache” flag doesn’t always work. So many debugging issues come up when devs don’t realize this and they’re like, “but I changed the file, and the change doesn’t work!”
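Part of why the “Narrator: it did” case happens is how layer cache keys are chosen. Roughly as Docker’s docs describe the classic builder, a `RUN` step is matched on its command text alone, while `COPY` also checksums the copied files. The toy model below (hypothetical names, a simplified sketch rather than Docker’s real algorithm) shows a `RUN git clone` layer staying cached even if the remote repo changed, because nothing in its key changed.

```python
import hashlib


def layer_key(parent_key: str, instruction: str, context: dict[str, bytes]) -> str:
    """Cache key for one build step in a simplified model.

    RUN steps hash only their command text; COPY steps also hash the
    contents of the source file from the build context.
    """
    h = hashlib.sha256()
    h.update(parent_key.encode())  # chaining: a parent change invalidates children
    h.update(instruction.encode())
    op, _, args = instruction.partition(" ")
    if op == "COPY":
        src = args.split()[0]
        h.update(context[src])
    return h.hexdigest()


def build(steps: list[str], context: dict[str, bytes], cache: set[str]) -> list[str]:
    """Return 'CACHED' or 'RUN' for each step, recording new keys in `cache`."""
    report = []
    key = "base"
    for step in steps:
        key = layer_key(key, step, context)
        if key in cache:
            report.append("CACHED")
        else:
            cache.add(key)
            report.append("RUN")
    return report


if __name__ == "__main__":
    steps = [
        "RUN git clone https://example.com/repo.git /src",  # hypothetical URL
        "COPY app.py /app/app.py",
        "RUN python -m compileall /app",
    ]
    cache: set[str] = set()
    ctx = {"app.py": b"print('v1')"}
    print(build(steps, ctx, cache))  # ['RUN', 'RUN', 'RUN']
    ctx["app.py"] = b"print('v2')"
    print(build(steps, ctx, cache))  # ['CACHED', 'RUN', 'RUN']
```

In this model the clone step is reused no matter what happened upstream; only a changed command string or a changed parent layer would force it to rerun.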

`docker system prune -a` and beat that SSD into submission until it dies, alas.